I had a strange idea: why not use Grist as an infrastructure monitoring tool?

For an old (in-house, now defunct) monitoring system, I had written some binaries that each do one specific job, like querying a webpage, pinging a system, or connecting to a TCP port.

All those binaries have one thing in common: they return 0 on success, 1 on a warning, and 2 on an error. They also write their error messages to stdout.

For example, to test a TCP port:

    ./testtcp --url '192.168.0.218' --port '3389'

Or to test whether a specific string appears on a webpage:

    ./web --containsStr 'Characterization of Selective Autophagy' --url 'https://www.sfb1177.de/' --timeout '10' --code '200'
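
To illustrate how a caller can consume this exit code convention, here is a minimal shell sketch. `run_check` is a hypothetical helper (not one of the original binaries), and the `sh -c` commands merely stand in for real checks:

```shell
#!/bin/sh
# Hypothetical helper: run a check command and translate its exit code
# into a label, following the 0 = success, 1 = warning, 2 = error convention.
run_check() {
    # Capture the check's output; the "if" also keeps this safe under "set -e".
    if output=$("$@" 2>&1); then
        code=0
    else
        code=$?
    fi
    case "$code" in
        0) status="OK" ;;
        1) status="WARNING" ;;
        2) status="ERROR" ;;
        *) status="UNKNOWN" ;;   # anything else is outside the convention
    esac
    printf '%s (exit %d): %s\n' "$status" "$code" "$output"
}

# Demo with stand-in commands instead of the real check binaries.
run_check sh -c 'echo "all fine"; exit 0'
run_check sh -c 'echo "slow response"; exit 1'
run_check sh -c 'echo "connection refused"; exit 2'
```

With a real check, the call would look like `run_check ./testtcp --url '192.168.0.218' --port '3389'`.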

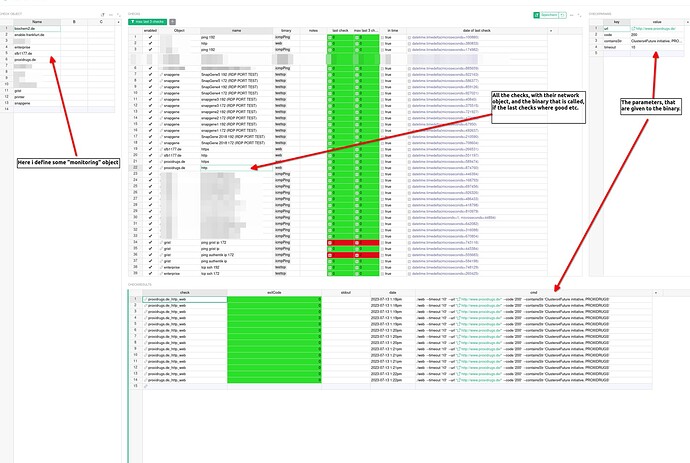

Now I’ve written a short “daemon” that connects to Grist via its API, fetches all configured checks and their settings, calls the binaries, and sends the results back to Grist. And voilà, an actually nice system.

I don’t know yet whether I want to explore this idea further, but so far it works quite well.

Edit: The good thing about those small binaries is that they are easy to write. You can also wrap an existing binary in a shell script so that it returns those exit codes, and then just call it via the “daemon”, so the system is easily extensible.
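
As a rough sketch of such a wrapper, the following shell functions wrap the standard `df` tool. The name `checkdisk`, the flags `--path`, `--warn` and `--crit`, and the default thresholds are all assumptions made up for this example; the flags just follow the ` --key 'value' ` style the daemon uses when it builds the command line:

```shell
#!/bin/sh
# Hypothetical wrapper "checkdisk": makes the standard `df` tool follow the
# 0 = success, 1 = warning, 2 = error convention. All names are illustrative.

# Map a usage percentage to an exit code using the two thresholds.
classify_usage() {
    used=$1
    warn=$2
    crit=$3
    if [ "$used" -ge "$crit" ]; then
        echo "usage ${used}% >= critical threshold ${crit}%"
        return 2
    elif [ "$used" -ge "$warn" ]; then
        echo "usage ${used}% >= warning threshold ${warn}%"
        return 1
    fi
    return 0
}

checkdisk() {
    path=/
    warn=80
    crit=95
    # Parse "--key value" pairs, as passed by the daemon.
    while [ $# -gt 0 ]; do
        if [ $# -lt 2 ]; then
            echo "missing value for $1"
            return 2
        fi
        case "$1" in
            --path) path=$2 ;;
            --warn) warn=$2 ;;
            --crit) crit=$2 ;;
            *) echo "unknown option: $1"; return 2 ;;
        esac
        shift 2
    done
    # In POSIX `df -P` output, column 5 of line 2 is the capacity, e.g. "42%".
    used=$(df -P "$path" 2>/dev/null | awk 'NR == 2 { sub("%", "", $5); print $5 }')
    if [ -z "$used" ]; then
        echo "could not read disk usage for $path"
        return 2
    fi
    classify_usage "$used" "$warn" "$crit"
}
```

Saved as an executable script with a final line `checkdisk "$@"`, it could be called like the other binaries, for example `./checkdisk --path '/' --warn '80' --crit '95'`.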

What do you think?

Edit2:

Also, the daemon is only 40 lines of Nim code:

    # Uses threadpool, so older Nim versions need to compile with --threads:on.
    import gristapi, json, os, strutils, tables, times
    import strformat, osproc, threadpool

    var grist = newGristApi(
      docId = "<myDocId>",
      apiKey = "<myApiKey>",
      server = "<myGristServer>"
    )

    # Run one check binary and store its result in the "Checkresults" table.
    proc doCheck(name, binary, params: string) {.thread.} =
      {.gcsafe.}:
        let cmd = fmt"./{binary} {params}"
        let (output, exitCode) = execCmdEx(command = cmd)
        case exitCode
        of 0:
          echo fmt"[GOOD] {name}"
        else:
          echo fmt"[BAD!] {name}"
        discard grist.addRecords("Checkresults", @[%* {
          "check": name,
          "exitCode": exitCode,
          "stdout": output,
          "date": $now(),
          "cmd": cmd
        }])

    # Fetch all enabled checks, build each command line from its
    # "Checkparams" rows, and run the checks in parallel.
    let checks = grist.fetchTableAsTable("Checks", filter = %* {"enabled": [true]})
    for id, check in checks.pairs:
      let name = check["slug"].getStr()
      let checkparams = grist.fetchTableAsTable("Checkparams", filter = %* {"check": [id]})
      let binary = check["binary"].getStr()
      var params = ""
      for checkparam in checkparams.values:
        let key = checkparam["key"].getStr()
        let value = checkparam["value"].getStr()
        params &= fmt" --{key} '{value}' "
      spawn doCheck(name, binary, params)
    sync()  # wait for all spawned checks to finish